This post walks through setting up an ELK logging stack. Everything runs on a single machine with the following system configuration:

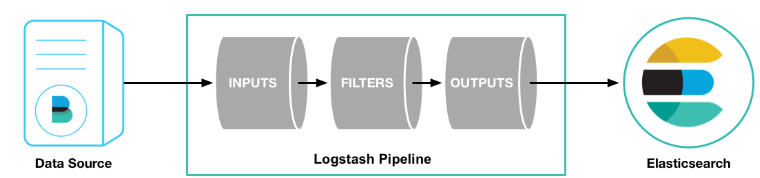

The overall architecture of Logstash is shown below:

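We will use Redis as the INPUTS in the diagram above and Elasticsearch as the OUTPUTS, which is also what Logstash officially recommends. For installing them, see these posts: for Redis, "Installing and Using Redis"; for Elasticsearch, "Single-Node Elasticsearch Installation".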

Note: Elasticsearch, Logstash and Kibana should ideally all be the same version. Version 6.4.0 is used throughout this post.

Kibana installation is covered later in this post. First, let's install Logstash.

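First, download the Logstash package. You can get it from the official website, or download logstash-6.4.0.tar.gz from here. Downloading the matching version from the official Logstash website is recommended.

```bash
$ cd /home/hewentian/ProjectD/
```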

After extraction you get the directory `logstash-6.4.0`. Take a look at what it contains:

```bash
$ cd /home/hewentian/ProjectD/logstash-6.4.0
$ ls
bin           data          lib          logstash-core             NOTICE.TXT  x-pack
config        Gemfile       LICENSE.txt  logstash-core-plugin-api  tools
CONTRIBUTORS  Gemfile.lock  logs         modules                   vendor
```

Test whether the installation works, using standard input and standard output as the input and output:

```bash
$ cd /home/hewentian/ProjectD/logstash-6.4.0/bin
```

The test result above shows that the software is installed correctly. Now let's move on to our own configuration.

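Configuration files live in the `config` directory, which already contains a sample configuration. Since we are using Redis as our INPUTS, we create a configuration file for it:

```bash
$ cd /home/hewentian/ProjectD/logstash-6.4.0/config
$ cp logstash-sample.conf logstash-redis.conf
$ vi logstash-redis.conf
```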

Put the following in `logstash-redis.conf`. No FILTERS are configured yet (we will cover them later):

```
# Sample Logstash configuration for creating a simple
# Redis -> Logstash -> Elasticsearch pipeline.

input {
  redis {
    # Sets the type field on events from this input; the output routes on it
    type => "systemlog"
    host => "127.0.0.1"
    port => 6379
    password => "abc123"
    db => 0
    # Read entries from the Redis list whose key is "systemlog"
    data_type => "list"
    key => "systemlog"
  }
}

output {
  if [type] == "systemlog" {
    elasticsearch {
      hosts => ["http://127.0.0.1:9200"]
      # One index per day, e.g. redis-systemlog-2018.09.30
      index => "redis-systemlog-%{+YYYY.MM.dd}"
      #user => "elastic"
      #password => "changeme"
    }
  }
}
```
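To make the `index` setting concrete, here is a small Python sketch of what the output section above does with each event. It is an illustration, not Logstash code. It assumes that `%{+YYYY.MM.dd}` formats the event's `@timestamp` and that Logstash keeps this timestamp in UTC. Under that assumption, the daily index rolls over at UTC midnight rather than local midnight. The function name `output_index` is made up for this example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def output_index(event_type: str, timestamp: datetime) -> Optional[str]:
    """Sketch of the output section: which index (if any) an event is written to."""
    # Only the `if [type] == "systemlog"` branch exists; other events get no output.
    if event_type != "systemlog":
        return None
    # %{+YYYY.MM.dd} formats the event timestamp, assumed here to be kept in UTC.
    utc = timestamp.astimezone(timezone.utc)
    return f"redis-systemlog-{utc:%Y.%m.%d}"


utc_plus_8 = timezone(timedelta(hours=8))
# 07:00 on Oct 1 at UTC+8 is 23:00 on Sep 30 in UTC.
print(output_index("systemlog", datetime(2018, 10, 1, 7, 0, tzinfo=utc_plus_8)))
# redis-systemlog-2018.09.30
print(output_index("userlog", datetime(2018, 10, 1, 7, 0, tzinfo=utc_plus_8)))
# None
```

If the UTC assumption holds, events logged early in the morning at UTC+8 end up in the previous day's index.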

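Before starting Logstash, it's a good habit to check that the configuration file is valid:

```bash
$ cd /home/hewentian/ProjectD/logstash-6.4.0/bin
# -t is short for --config.test_and_exit: check the config, then exit
$ ./logstash -f ../config/logstash-redis.conf -t
```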

If you see output like the following, the configuration is valid:

```
Sending Logstash logs to /home/hewentian/ProjectD/logstash-6.4.0/logs which is now configured via log4j2.properties
[2018-09-30T16:32:45,043][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/home/hewentian/ProjectD/logstash-6.4.0/data/queue"}
[2018-09-30T16:32:45,064][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/home/hewentian/ProjectD/logstash-6.4.0/data/dead_letter_queue"}
[2018-09-30T16:32:46,030][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
Configuration OK
[2018-09-30T16:32:50,630][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash
```

Next, start Logstash:

```bash
$ cd /home/hewentian/ProjectD/logstash-6.4.0/bin
$ ./logstash -f ../config/logstash-redis.conf
```

If you see output like the following, Logstash has started successfully:

```
[2018-09-30T16:34:44,175][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
```

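Now let's run a simple test.

First, push three records into Redis:

```bash
$ cd /home/hewentian/ProjectD/redis-4.0.11_master/src
$ ./redis-cli -h 127.0.0.1
127.0.0.1:6379> AUTH abc123
OK
127.0.0.1:6379> lpush systemlog hello world
(integer) 2
127.0.0.1:6379> lpush systemlog '{"name":"Tim Ho","age":23,"student":true}'
(integer) 1
```

`LPUSH` returns the length of the list after the push. `lpush systemlog hello world` pushes two entries, `hello` and `world`. The second command returns 1 because Logstash has already consumed the first two entries from the list.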
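A side note on `lpush`: once a backlog builds up, the end of the list the producer pushes to decides the order in which events are consumed. The Python sketch below models a Redis list with `collections.deque`. It assumes the consumer always pops from the head of the list, as Redis `LPOP`/`BLPOP` do. I believe the Logstash redis input reads from the head for `data_type => "list"`, but check the plugin documentation for your version. The helpers `drain`, `lpush` and `rpush` are hypothetical names for this illustration.

```python
from collections import deque
from typing import Deque, Iterable, List


def drain(redis_list: Deque[str]) -> List[str]:
    """Pop every entry from the head of the list, like repeated LPOP/BLPOP calls."""
    consumed = []
    while redis_list:
        consumed.append(redis_list.popleft())
    return consumed


def lpush(redis_list: Deque[str], values: Iterable[str]) -> int:
    """LPUSH: each value is inserted at the head; returns the new list length."""
    for value in values:
        redis_list.appendleft(value)
    return len(redis_list)


def rpush(redis_list: Deque[str], values: Iterable[str]) -> int:
    """RPUSH: each value is appended at the tail; returns the new list length."""
    for value in values:
        redis_list.append(value)
    return len(redis_list)


via_lpush: Deque[str] = deque()
print(lpush(via_lpush, ["hello", "world"]))  # 2, like the redis-cli output above
print(drain(via_lpush))                       # ['world', 'hello']: newest first

via_rpush: Deque[str] = deque()
rpush(via_rpush, ["hello", "world"])
print(drain(via_rpush))                       # ['hello', 'world']: oldest first
```

When Logstash keeps up with the producer, as in this test, the difference does not show. If arrival order matters under load, pushing with RPUSH keeps a head-reading consumer in FIFO order.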

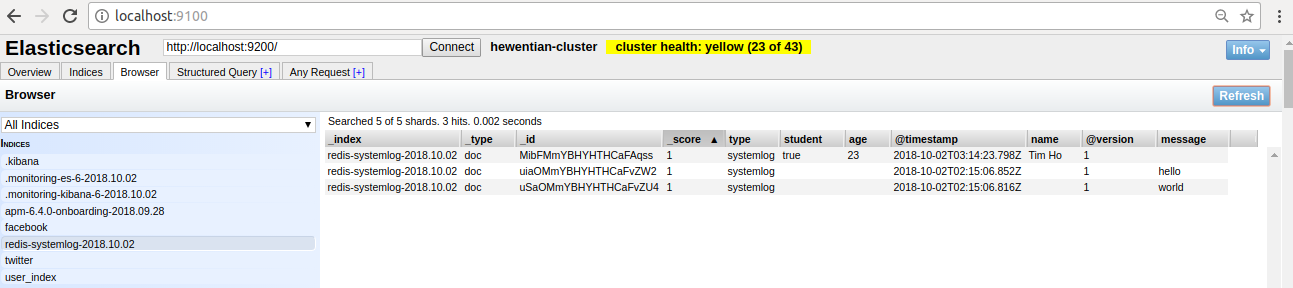

Open elasticsearch-head and you can see that the data has made it into Elasticsearch:

Notice that entries pushed to `systemlog` in JSON format are automatically parsed into the corresponding fields in Elasticsearch. Anything else goes into the default field, `message`.
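This behaviour comes from the codec. As far as I know, the redis input decodes entries with the `json` codec by default. When an entry is not valid JSON, that codec falls back to plain text in `message` and adds a `_jsonparsefailure` tag. The Python sketch below approximates that behaviour. `decode_entry` is a hypothetical helper. The real event also carries `@timestamp` and `@version`, which are omitted here.

```python
import json
from typing import Any, Dict


def decode_entry(raw: str, event_type: str) -> Dict[str, Any]:
    """Approximate how one Redis list entry turns into an event."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        parsed = None

    if isinstance(parsed, dict):
        # A JSON object: each key becomes its own field.
        event: Dict[str, Any] = dict(parsed)
    else:
        # Not a JSON object: keep the raw text in `message` and tag the failure.
        # Simplification: valid non-object JSON (arrays, bare numbers) also lands here,
        # which may not match the real codec.
        event = {"message": raw, "tags": ["_jsonparsefailure"]}

    # `type => "systemlog"` in the input; an existing type field is not overwritten.
    event.setdefault("type", event_type)
    return event


print(decode_entry('{"name":"Tim Ho","age":23,"student":true}', "systemlog"))
# {'name': 'Tim Ho', 'age': 23, 'student': True, 'type': 'systemlog'}
print(decode_entry("hello", "systemlog"))
# {'message': 'hello', 'tags': ['_jsonparsefailure'], 'type': 'systemlog'}
```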

Installing Kibana

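Installing Kibana is simple. Download the Kibana package from the official website, or download kibana-6.4.0-linux-x86_64.tar.gz from here. Downloading the matching version from the official Kibana website is recommended.

```bash
$ cd /home/hewentian/ProjectD/
```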

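To point Kibana at the Elasticsearch instance you want to browse, you only need to change the options below. If Elasticsearch is installed on this machine and uses the default port 9200, no changes are needed.

```bash
$ cd /home/hewentian/ProjectD/kibana-6.4.0-linux-x86_64/config
$ vi kibana.yml
```

The relevant options in `kibana.yml` are:

```yaml
# Change the following options if needed; leave them as they are to use the defaults
#server.port: 5601
#elasticsearch.url: "http://localhost:9200"
#elasticsearch.username: "user"
#elasticsearch.password: "pass"
```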

Then start Kibana:

```bash
$ cd /home/hewentian/ProjectD/kibana-6.4.0-linux-x86_64/bin
$ ./kibana    # or run it in the background: nohup ./kibana &
```

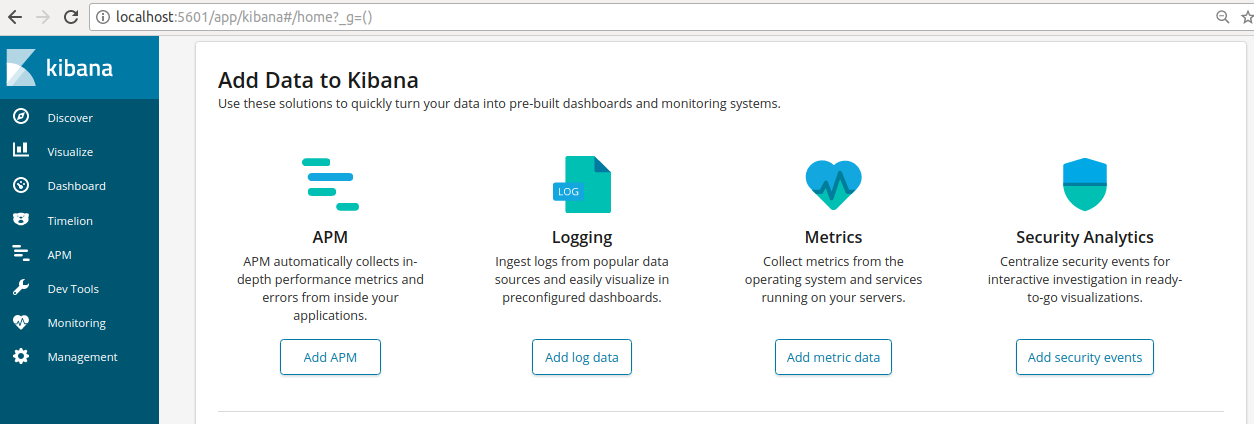

Open a browser and go to:

http://localhost:5601

You will see the following screen:

In the screen above, click [Management] -> [Index Patterns] -> [Create index pattern] and enter the index name redis-systemlog-*, as shown below:

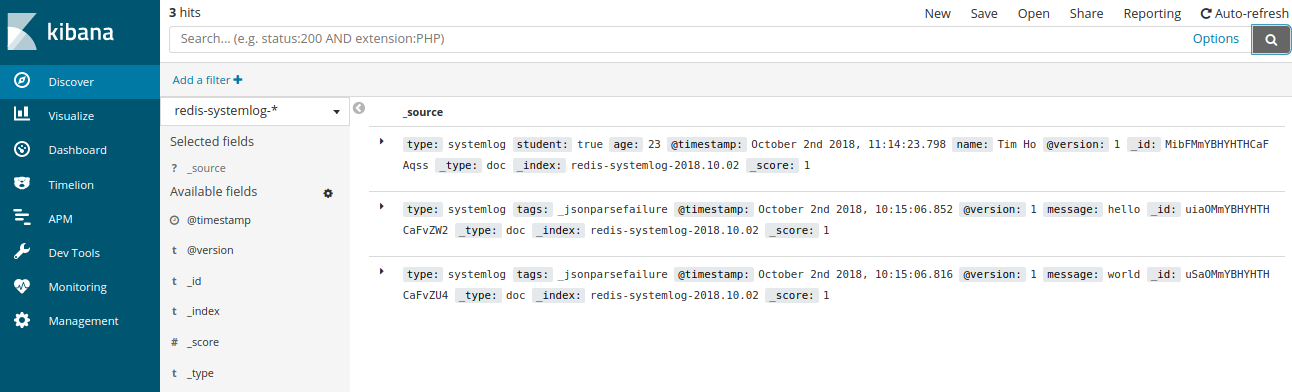

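Click [Next step]. On the next screen, set Time Filter field name to "I don't want to use the Time Filter", then click Create index pattern to finish. Next, click [Discover] on the left and select redis-systemlog-* in the left-hand panel. You will see the following results: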
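The index pattern `redis-systemlog-*` works because `*` matches any suffix, so one pattern covers every daily index the pipeline creates. As a rough illustration, Python's `fnmatch.fnmatchcase` gives the same result for a pattern that uses only `*`. Note that `fnmatch` also gives `?` and `[...]` special meaning, so the comparison only holds for `*`. The index names below are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical index names: two daily systemlog indices and one unrelated index.
indices = [
    "redis-systemlog-2018.09.30",
    "redis-systemlog-2018.10.01",
    "userlog-2018.10",
]

pattern = "redis-systemlog-*"
matched = [name for name in indices if fnmatchcase(name, pattern)]
print(matched)  # ['redis-systemlog-2018.09.30', 'redis-systemlog-2018.10.01']
```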

That completes a basic ELK setup. Below is a simple configuration example that reads two Redis lists, `systemlog` and `userlog`, and writes each type to its own monthly index:

```
# Sample Logstash configuration for creating a simple
# Redis -> Logstash -> Elasticsearch pipeline.

input {
  # system log
  redis {
    type => "systemlog"
    host => "127.0.0.1"
    port => 6379
    password => "abc123"
    db => 0
    data_type => "list"
    key => "systemlog"
    codec => "json"
  }

  # user log
  redis {
    type => "userlog"
    host => "127.0.0.1"
    port => 6379
    password => "abc123"
    db => 0
    data_type => "list"
    key => "userlog"
    codec => "json"
  }
}

output {
  elasticsearch {
    hosts => ["http://127.0.0.1:9200"]
    # %{type} is replaced by each event's type field: one index per type per month
    index => "%{type}-%{+YYYY.MM}"
  }
}
```
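In this version the output no longer hard-codes the index name. `%{type}` is replaced by each event's `type` field and `%{+YYYY.MM}` by the event's timestamp, so `systemlog` and `userlog` events each get one index per month. The Python sketch below imitates that substitution under a few assumptions:

- Only the `YYYY`, `MM` and `dd` date tokens are supported.
- The timestamp is formatted in UTC.
- A reference to a missing field is left in the string unchanged, which is my understanding of Logstash's behaviour.

`sprintf` here is a hypothetical helper, not a Logstash API.

```python
import re
from datetime import datetime, timezone
from typing import Any, Dict

# Only the date tokens used in this post, mapped to strftime codes.
DATE_TOKENS = {"YYYY": "%Y", "MM": "%m", "dd": "%d"}


def sprintf(pattern: str, event: Dict[str, Any]) -> str:
    """Expand %{field} and %{+DATEFORMAT} references against one event."""

    def expand(match: "re.Match[str]") -> str:
        ref = match.group(1)
        if ref.startswith("+"):
            # Date reference: convert the tokens, then format @timestamp in UTC.
            fmt = re.sub(r"YYYY|MM|dd", lambda t: DATE_TOKENS[t.group(0)], ref[1:])
            return event["@timestamp"].astimezone(timezone.utc).strftime(fmt)
        if ref in event:
            return str(event[ref])
        # Missing field: leave the reference untouched.
        return match.group(0)

    return re.sub(r"%\{([^}]+)\}", expand, pattern)


ts = datetime(2018, 10, 1, 8, 30, tzinfo=timezone.utc)
print(sprintf("%{type}-%{+YYYY.MM}", {"type": "userlog", "@timestamp": ts}))  # userlog-2018.10
print(sprintf("%{type}-%{+YYYY.MM}", {"@timestamp": ts}))  # %{type}-2018.10
```

The second call shows why every producer should set a `type`. Otherwise, the index name would contain the literal reference.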

Next, we will explore some of its more advanced features.

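Often we only need certain pieces of information from an entry in systemlog, which is not necessarily JSON. How do we extract just those parts? This is where FILTERS come in.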

Inside FILTERS we use grok regular expressions. For a description of grok, see:

https://www.elastic.co/guide/en/logstash/current/plugins-filters-grok.html
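The idea behind grok is that each `%{PATTERN:field}` reference expands to a named regular expression, and whatever it matches is stored in `field`. The Python sketch below reproduces that idea with deliberately simplified stand-ins for grok's `IP`, `WORD`, `URIPATHPARAM` and `NUMBER` patterns; the real definitions are much more thorough. The input is a sample HTTP log line of the kind used in the grok documentation linked above. `grok`, `grok_to_regex` and `SIMPLE_PATTERNS` are hypothetical names.

```python
import re
from typing import Dict, Optional

# Simplified stand-ins for a few grok patterns (IPv4 only, no edge cases).
SIMPLE_PATTERNS = {
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    "WORD": r"\b\w+\b",
    "URIPATHPARAM": r"\S+",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
}


def grok_to_regex(expression: str) -> str:
    """Replace each %{PATTERN:field} with a named capture group."""
    return re.sub(
        r"%\{(\w+):(\w+)\}",
        lambda m: f"(?P<{m.group(2)}>{SIMPLE_PATTERNS[m.group(1)]})",
        expression,
    )


def grok(expression: str, line: str) -> Optional[Dict[str, str]]:
    """Return the captured fields, or None when the line does not match."""
    match = re.search(grok_to_regex(expression), line)
    return match.groupdict() if match else None


expr = "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}"
print(grok(expr, "55.3.244.1 GET /index.html 15824 0.043"))
# {'client': '55.3.244.1', 'method': 'GET', 'request': '/index.html', 'bytes': '15824', 'duration': '0.043'}
```

As in the sketch, grok captures are strings by default. In the real filter, a suffix such as `%{NUMBER:bytes:int}` converts the value, and a line that does not match is tagged `_grokparsefailure`.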

To be continued...